Having recently started work on the Udacity MiniProject #1 from the Intro to Machine Learning course, I found that what started, once again, as a simple verification that all the Python code and libraries worked ended up being an interesting dive into handling text data and validating results. The MiniProject uses a subset of the Enron email corpus and measures how accurately the author of each email can be identified. (The Enron corpus is famous and is used in a lot of different ways. I was made aware of it in my previous work life through Guidance Software's EnCase eDiscovery suite, which removed duplicate email conversations to show only the unique data. Cool stuff.)

The basic work of the MiniProject is in email_preprocess.py. Three interesting things are done here using the sklearn libraries:

- A routine which takes in the original data and spits out training and test data:

features_train, features_test, labels_train, labels_test = cross_validation.train_test_split(word_data, authors, test_size=0.1, random_state=42)

- Conversion of text data into numbers and removal of high frequency words (like the, a, an etc.) that are found in all the classes:

vectorizer = TfidfVectorizer(sublinear_tf=True, max_df=0.5, stop_words='english')

- Reduction of features (i.e. how many words to check against) to reduce the memory footprint:

selector = SelectPercentile(f_classif, percentile=10)

selector.fit(features_train_transformed, labels_train)
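To see the three steps working together, here is a minimal sketch of my own, not the course's email_preprocess.py. The toy corpus, the `preprocess` function name and the `percentile=50` in the demo call are assumptions made so the example runs on a handful of sentences. It also imports `train_test_split` from `sklearn.model_selection`, because the `cross_validation` module has been removed from newer sklearn releases.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.feature_selection import SelectPercentile, f_classif
from sklearn.model_selection import train_test_split

# Hypothetical toy corpus standing in for word_data / authors.
word_data = [
    "gas pipeline contract schedule",
    "power trade contract review",
    "gas market price forecast",
    "pipeline capacity schedule update",
    "power market price contract",
    "legal review agreement draft",
    "counterparty agreement termination notice",
    "legal notice draft signature",
    "agreement signature counterparty review",
    "termination draft legal memo",
]
authors = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]


def preprocess(word_data, authors, test_size=0.1, percentile=10):
    """Split, vectorize and select features (hypothetical helper)."""
    # Step 1: hold back part of the data for testing.
    features_train, features_test, labels_train, labels_test = train_test_split(
        word_data, authors, test_size=test_size, random_state=42
    )

    # Step 2: turn text into TF-IDF numbers, dropping English stop words
    # and any word found in more than half of the training documents.
    vectorizer = TfidfVectorizer(sublinear_tf=True, max_df=0.5, stop_words="english")
    features_train_transformed = vectorizer.fit_transform(features_train)
    features_test_transformed = vectorizer.transform(features_test)

    # Step 3: keep only the highest-scoring share of the words.
    selector = SelectPercentile(f_classif, percentile=percentile)
    selector.fit(features_train_transformed, labels_train)
    train = selector.transform(features_train_transformed).toarray()
    test = selector.transform(features_test_transformed).toarray()
    return train, test, labels_train, labels_test


# percentile=50 only because the toy vocabulary is tiny.
train, test, labels_train, labels_test = preprocess(
    word_data, authors, test_size=0.2, percentile=50
)
print(train.shape, test.shape)
```

Note that the vectorizer and the selector are fitted on the training data only and then applied to the test data, so nothing about the test emails leaks into the feature choice.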

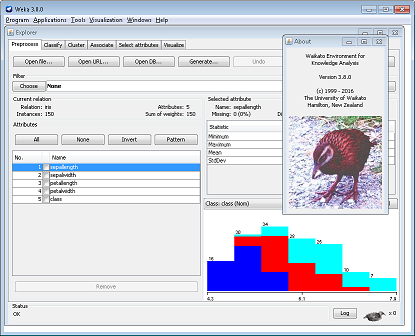

Sometimes I wish to code the Java for myself, but at other times I have a clear understanding of an algorithm or class function, so it makes sense to leverage what has already been coded. In this case I was researching Java versions of the sklearn Cross Validation routines and stumbled across two frameworks that were new to me. One is older, Java-ML by Thomas Abeel. The other is Weka, from the University of Waikato in New Zealand. Weka is a complete machine learning framework that can be incorporated into your own Java code, run at the command line, or run in a GUI (run class weka.gui.Main in Java). I'm surprised I hadn't found Weka before, but I guess with all of the noise from looking at ML and Naïve Bayes routines it slipped through.

The ability of Weka to run everything from one location is amazing. Choose the data source, choose a filter, choose the type of machine learning algorithm and out pop the results. It is a very quick way of validating my earlier results.

My next step is to verify the Python data in Weka. The MiniProject divides the data into two pieces, the label (i.e. which class it belongs to, from file: email_authors.pkl):

aI0

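and the data (from file: word_data.pkl; the p1 line looks like a line number, but it appears to be a bookkeeping marker written by Python's pickle text format rather than data, so it can be ignored when reading by eye; it is followed by the line of data):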

p1

aS' sbaile2 nonprivilegedpst 1 txu energi trade compani 2 bp capit energi fund lp may be subject to mutual termin 2 nobl gas market inc 3 puget sound energi inc 4 virginia power energi market inc 5 t boon picken may be subject to mutual termin 5 neumin product co 6 sodra skogsagarna ek for probabl an ectric counterparti 6 texaco natur gas inc may be book incorrect for texaco inc financi trade 7 ace capit re oversea ltd 8 nevada power compani 9 prior energi corpor 10 select energi inc origin messag from tweed sheila sent thursday januari 31 2002 310 pm to subject pleas send me the name of the 10 counterparti that we are evalu thank'
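The fragments above look like Python's text pickle format (protocol 0), assuming the files were written with an older Python 2 default. Read that way, `a` appends the previous item to a list, `I0` is the integer 0, `S'...'` is a string and `p1` stores the item in memo slot 1. Rather than parsing the text by hand, the `pickle` module can load it. The byte strings below are short hand-written stand-ins shaped like those fragments, not the real project files.

```python
import pickle

# Hand-written protocol-0 pickle text, shaped like the fragments quoted above.
# Illustrative stand-ins only, not the contents of the real .pkl files.
authors_pkl = b"(lp0\nI0\naI0\naI1\na."
words_pkl = (
    b"(lp0\n"
    b"S'sbaile2 nonprivilegedpst 1 txu energi trade compani'\np1\na"
    b"S'pleas send me the name of the 10 counterparti'\np2\na."
)

# pickle.loads rebuilds the Python lists; the memo markers vanish.
authors = pickle.loads(authors_pkl)
word_data = pickle.loads(words_pkl)

print(authors)
print(word_data)
```

With the real files, the same idea would be `pickle.load(open(path, "rb"))`. Only unpickle files from a source you trust, since loading a pickle can run arbitrary code.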

One thing you'll notice about the line of data is that some words are missing their final letters. I have yet to determine why this was done or if it appears like this in the original corpus.

Using Weka for verification is the goal, but I've hit a roadblock in converting the MiniProject files into ARFF files. As the data is converted into numbers, it isn't clear to me exactly how the fit is performed, so it has been difficult to manually transpose the files into the ARFF format. One nice thing about Weka is that it can convert many types of formatted data files into ARFF, but I'm stumped at this point as to how to make the conversion. I'll do another post when I've figured that out.
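One possible route, which I have not verified against Weka, is to skip the numeric conversion on the Python side and write the raw text and labels straight to ARFF, with the text as a string attribute and the author as a nominal class. Weka's StringToWordVector filter could then do the word-to-number step inside Weka. The function names, the relation name and the sample rows below are my own assumptions for illustration.

```python
def arff_quote(text):
    """Quote a string for ARFF: collapse whitespace, escape \\ and '."""
    cleaned = " ".join(text.split())
    return "'" + cleaned.replace("\\", "\\\\").replace("'", "\\'") + "'"


def to_arff(word_data, authors, relation="enron_authors"):
    """Build ARFF text with one string attribute and one nominal class."""
    classes = ",".join(str(c) for c in sorted(set(authors)))
    lines = [
        "@relation " + relation,
        "",
        "@attribute text string",
        "@attribute author {" + classes + "}",
        "",
        "@data",
    ]
    # One data row per email: quoted text, then the author label.
    for text, author in zip(word_data, authors):
        lines.append(arff_quote(text) + "," + str(author))
    return "\n".join(lines) + "\n"


# Hypothetical sample rows standing in for word_data / authors.
sample_words = ["txu energi trade compani", "pleas send me the name"]
sample_authors = [0, 1]
arff_text = to_arff(sample_words, sample_authors)
print(arff_text)
```

Writing `arff_text` to a file ending in .arff should give something Weka can open, at which point the filter and classifier choices can be made in the GUI.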